The following citation, in summary at the top, with more detail below, are for a large financial service firm. More about this time can be read in Balancing Act: A Practical Approach to Business Event Based Insights, Chapter 21. ERP Reporting and Chapter 35. Model the Repository.

Insurance Company Manages Very Large Data Warehouse

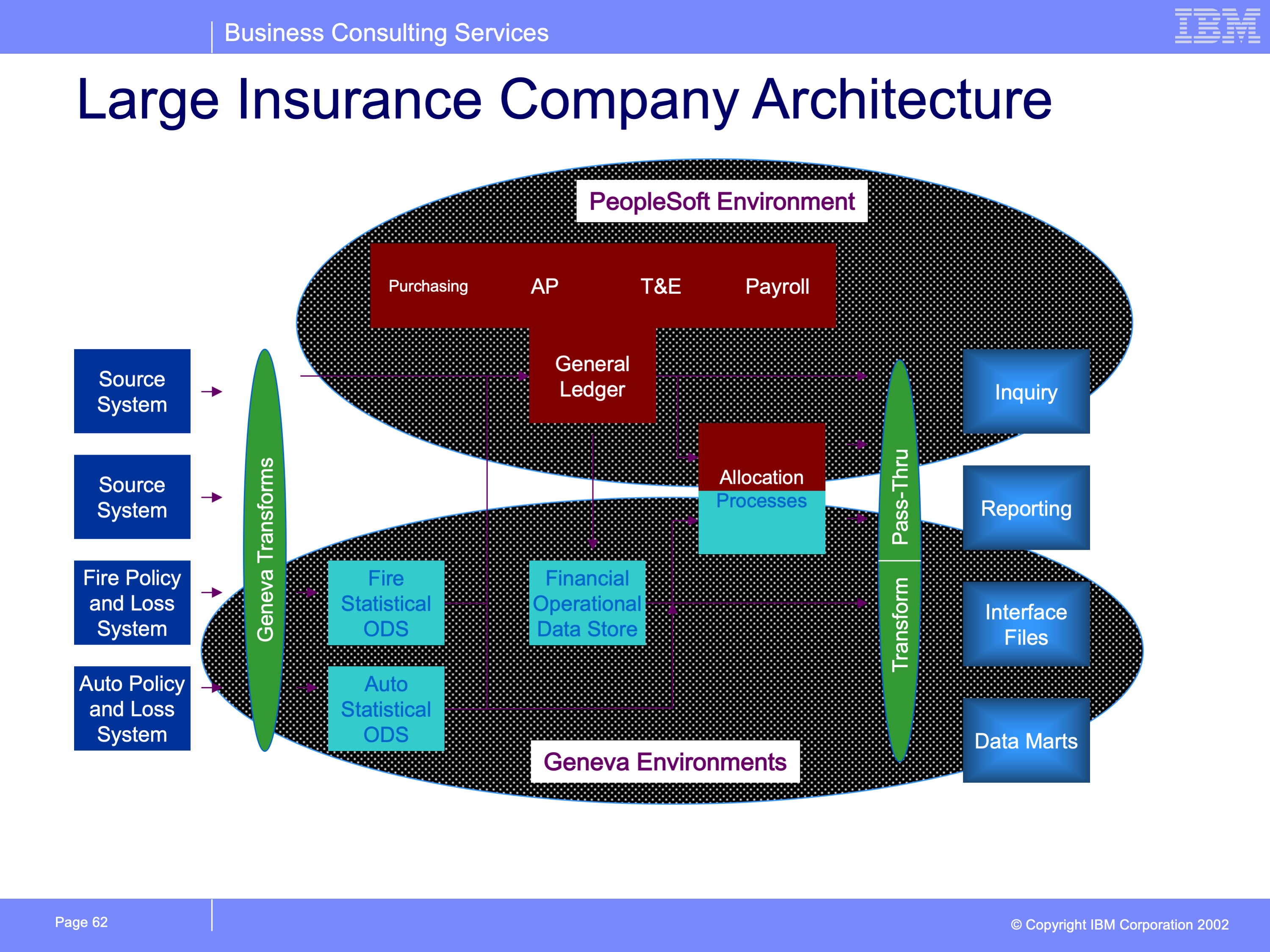

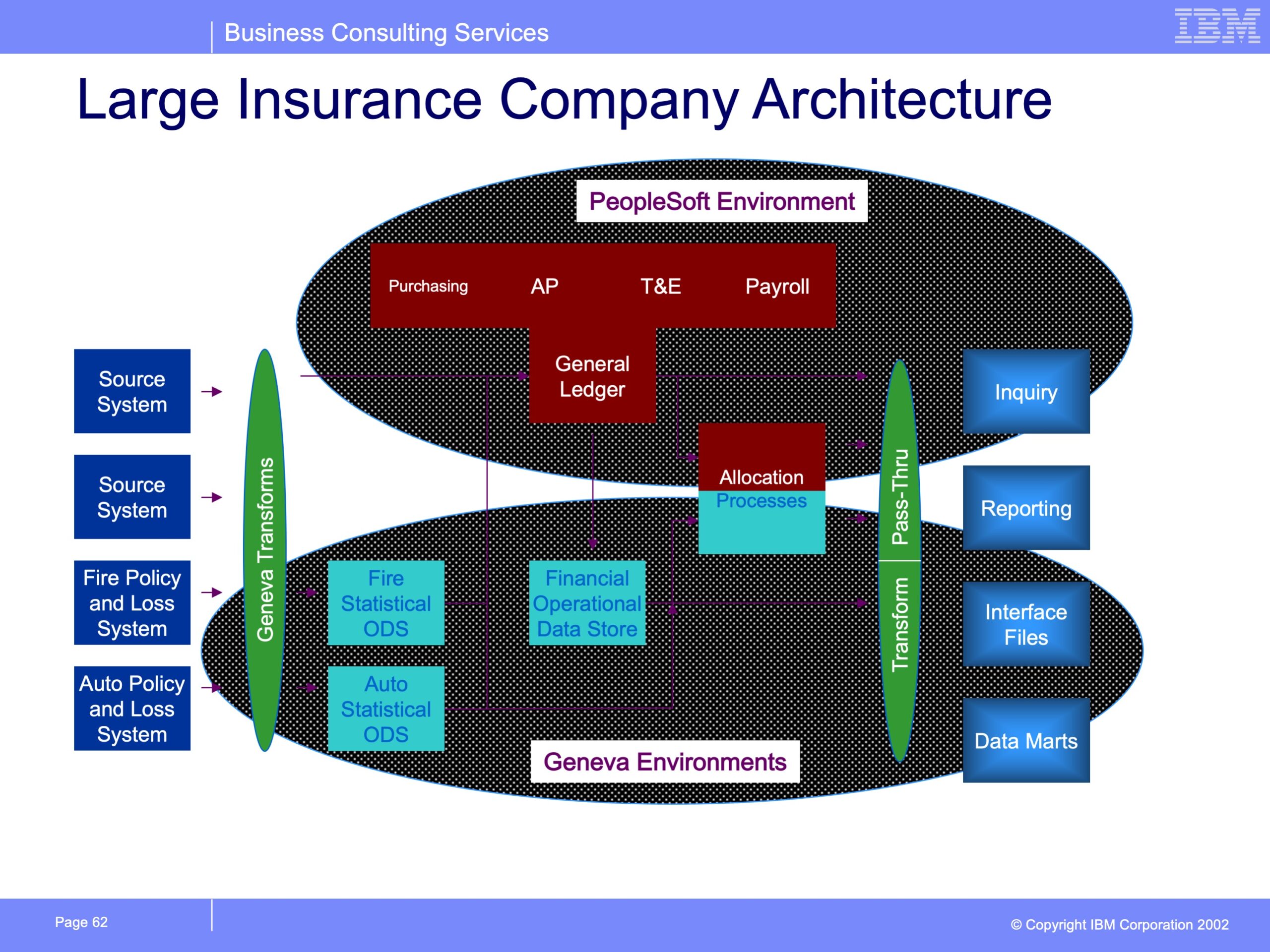

Three years ago, a Fortune 100 Insurance Company embarked upon a “ Data Warehouse Program” to “build a single consistent common source of financially balanced data to be used by multiple business users for decision support, data mining, and internal/external reporting.” A major component of this information architecture is the construction of multiple Operational Data Stores or ODS’s. These ODS’s may contain up to 10 years of event level or detailed transaction history as the basis for populating other data warehousing applications. The company uses Geneva ERS to load and extract data from these data stores.

Data is loaded into these repositories nightly. In some cases Geneva ERS is used to extract data from operational source systems. “Source systems” vary from the PeopleSoft Journal Lines DB/2 table to legacy flat files. The DB/2 extract process reads 80 physical DB2 partitions in parallel, scanning over 80 million records in approximately 10 minutes.

This extracted data is edited using a combination of custom Geneva ERS processes and stand-alone custom programs. After the data is edited it is loaded into the repositories in parallel by Geneva ERS. The number of records loaded varies by repository, from 100,000 financial transactions (journal lines) to more than 1,000,000 policy transactions. In total, approximately 2 million transactions a day are added to the repositories.

In the same pass of the data that loads the repositories, Geneva ERS also produces multiple reports and extract files for use by business users and other application systems. One of the repositories is “backed up” by Geneva ERS at the same time. This daily execution of Geneva ERS reads over 450 million records in 23 different entities and 220 physical partitions. It writes over 900 million physical records to over 800 files. This process has run in 1 hour 3 minutes of wall clock time and 3 hours of CPU time.

Other executions of Geneva ERS extract data on a daily, weekly, monthly and annual basis. The output from one execution creates an executive information drill down file accessed via the company Intranet. This web site is accessible by over 300 business users.

The Geneva “Views” executed in all of the above processes are maintained within the Geneva ViewBuilder software. This includes record structures, field types, relationships between structures, and the actual queries themselves. Many queries are very similar to programs in their sophistication and contain complex business logic. Some have over 900 column definitions. These views also utilize custom code for accessing certain files and executing business logic requiring a programming language. Over 100 people have been trained on programming using Geneva ERS, and the company has had up to 10 testing environments at one time.

The most sophisticated use of Geneva ERS emulates a PeopleSoft financial cost allocation process. Custom programs were developed which generate over 6,000 Geneva ERS views based upon over 7,000 PeopleSoft rules. Geneva ERS executes these views to scan the financial repository selecting records eligible for allocation. It then allocates these costs through four allocation layers, such as products, and geographical units. At 1999 year-end, this process read over 50 million records selecting nearly 3 million that were eligible for allocation. These 3 million records were exploded into over 290 million virtual allocation records, of which 186 million summarized records were written to physical files. The process runs in 7½ hours wall clock time and 28½ hours of CPU time.

Financial Services Company Simplifies Complex Processes

The Problem

Many financial service organizations were early adopters of computer technology. They quickly constructed systems to automate numerous processes. Programs were often added to the fringe of existing processes to solve new problems or provide an additional report or file for analysis. The need to keep multiple types of books and fulfill regulatory reporting requirements added to the complexity of some of these systems. These systems grew before modularization, subsystem definition, or other computing concepts were developed. Furthermore, the number of transactions generated by these organizations always challenged computing capacity. This required creative and complex solutions. Over time these systems metamorphosed into closely intertwined, interconnected, and inflexible systems.

In 1996, a Fortune 100 Insurance Company determined their reporting system was so inflexible that it would become unsupportable in the near future. They decided “…to build a single, consistent source of financially balanced data to be used by multiple business users for decision support, data mining, and internal/external reporting.” After analyzing their information needs they determined that the best approach to satisfy the broadest number of users was to construct a comprehensive data warehouse environment, including extract transformation processes, Operational Data Stores (ODSs), reporting environments, and data marts. The company viewed the ODSs as the heart of the data warehousing effort. ODSs are designed to contain up to 10 years of detailed transactional history. By storing the transactional detail, the company can satisfy requests for individual transactions, summaries using any field from the transaction detail, or snap shots as of any point in time. This robust environment truly enables the “single, consistent source of financially balanced data.”

Although such a data store simplifies and satisfies all types of information needs, it also means tremendous data volumes. Managing such data volumes requires a creative, industrial strength solution. It also requires people who know how to make it happen. This company went looking for a tool and the people that were up to the challenge. They chose PricewaterhouseCoopers and Geneva ERS.

The Solution

Geneva offers a robust data warehousing solution able to process tremendous amounts of data quickly. It also provides for innovative, flexible solutions that are easily supported by company employees. This company uses Geneva to do the following:

- Source system Extract and Transform

- ODS Load, Query, Interface production

- Detailed Financial Allocation Process

- Executive Information Delivery

Extract and Transform

Extracting from legacy systems can often present a challenge to the most robust tools. Especially when source systems include complex data structures and high data volumes. Legacy systems often were designed to support monthly reporting cycles by accumulating and summarizing data before finally kicking out the hard copy report at the end of the month. Changing such systems to provide more recent information can make the difference between a successful information environment and simply a different format for the same old content. However, making sure the results are right can be a very expensive process.

PwC consultants used a new approach to attack this problem. For this client, they first created a program which understood these complex and unique legacy structures, which could be called by the Geneva ERS open API. This program opened up difficult legacy systems to the power of Geneva. Geneva was used to extract data from multiple sources. The Geneva development environment allows definition of source system data structures. Once defined to Geneva, business logic can be applied. The business logic is stored as a Geneva “View.” The Views are organized by logical frequencies like daily or weekly processes or on request processes. Once the environment was created, they focused on understanding the data structures instead of tracing through the entire legacy process. They used an iterative prototyping approach to discover the source of all the data contained in legacy downstream files. They successfully proved that the system could be converted from a monthly to a daily system. They extracted the source system data and populated the ODSs. The iterative prototyping approach used by PwC consultants shaved months off the delivery cycle and hundreds of man-hours spent in legacy system research.

The power of Geneva is evident in the types of source systems from which data is extracted. In addition to the complex legacy structure noted above, a DB/2 extract process reads 80 physical DB/2 partitions in parallel, scanning over 80 million records in approximately 10 minutes. All processing is completed in a fraction of the time it would take another data warehousing tool.

Load, Query and Interface Production

PwC set out to help create an environment that would allow many users, with diverse information needs to pull the desired information from the repositories. They created data and processing models that allowed all queries to be resolved in a single pass of the transaction detail. These models minimizes data storage requirements by eliminating duplicate data from transactions, but combining the data back together for report production in an efficient manner.

In the same pass of the data that loads the repositories, Geneva ERS also produces multiple reports and extract files for use by business users and other application systems. Many output formats are possible including reports, spreadsheets and files. And because the ODSs contain transaction level detail, users are able to choose what level of detail they wish to see. The data model also allows for use of Geneva’s date effective join capabilities. This enables users to create reports as of any point in time. Summaries can be created using organizational structures from any point in time.

The client choose to construct an ODS to support the ERP General Ledger being installed. However, the coding structure for the new ERP package differed significantly from the historical organization and account coding structure. The ODS supported all interface production, translating from old to new and from new to old. Global utilities were constructed that were called from the Geneva API. Because of Geneva’s ability to process the detailed transactions, all fields could be translated at the lowest level of detail. This enabled consistent answer set production for all interfaces.

The number of records loaded varies by repository, from 100,000 financial transactions (journal lines) to more than 1,000,000 policy transactions. In total, approximately 2 million transactions a day are added to the repositories. The single pass architecture even produces back ups using Geneva ERS in the same pass of the ODS. This daily execution of Geneva ERS reads over 450 million records in 23 different entities and 220 physical partitions. It writes over 900 million physical records to over 800 files. This process has run in 1 hour 3 minutes of wall clock time and 3 hours of CPU time.

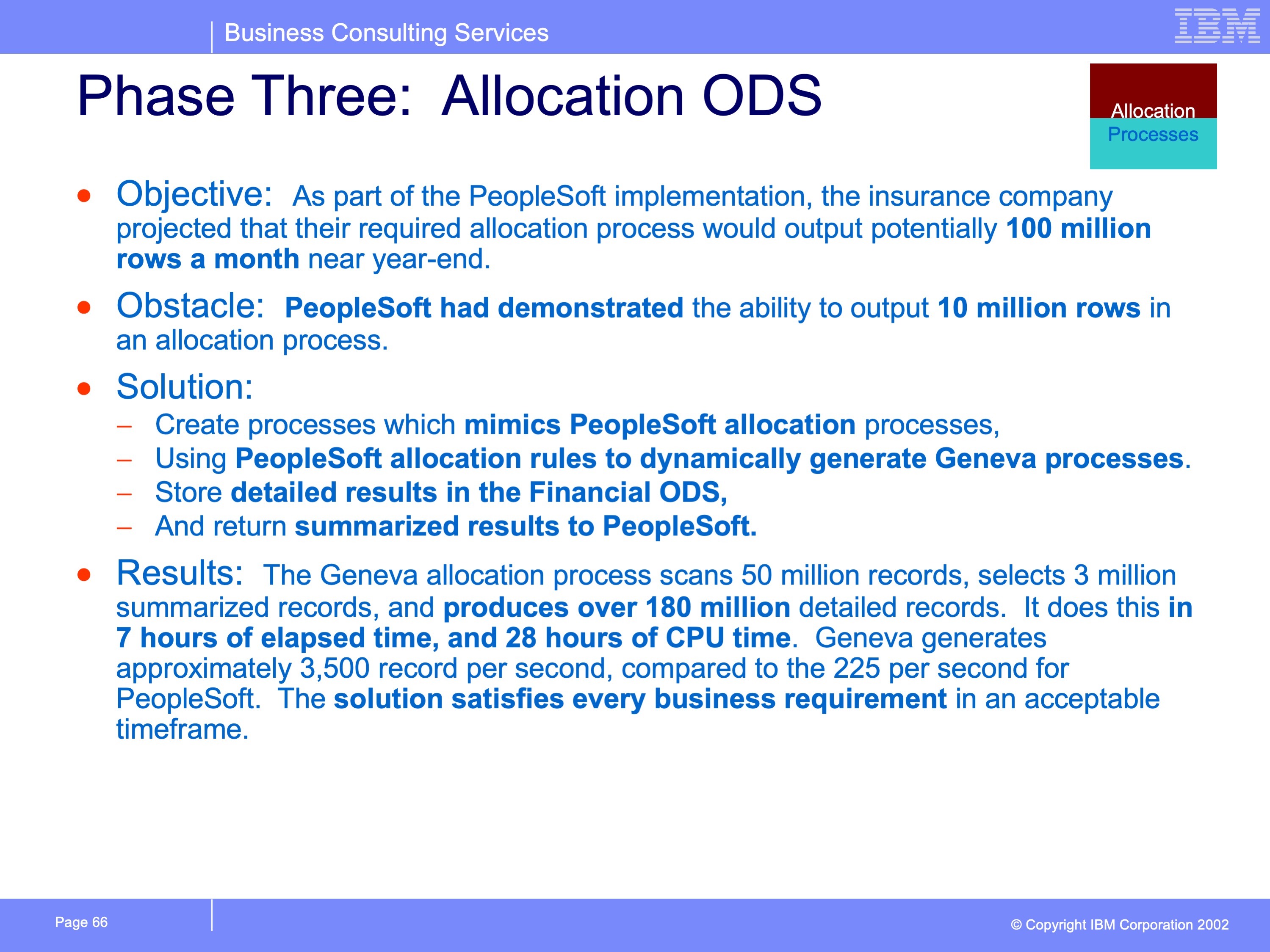

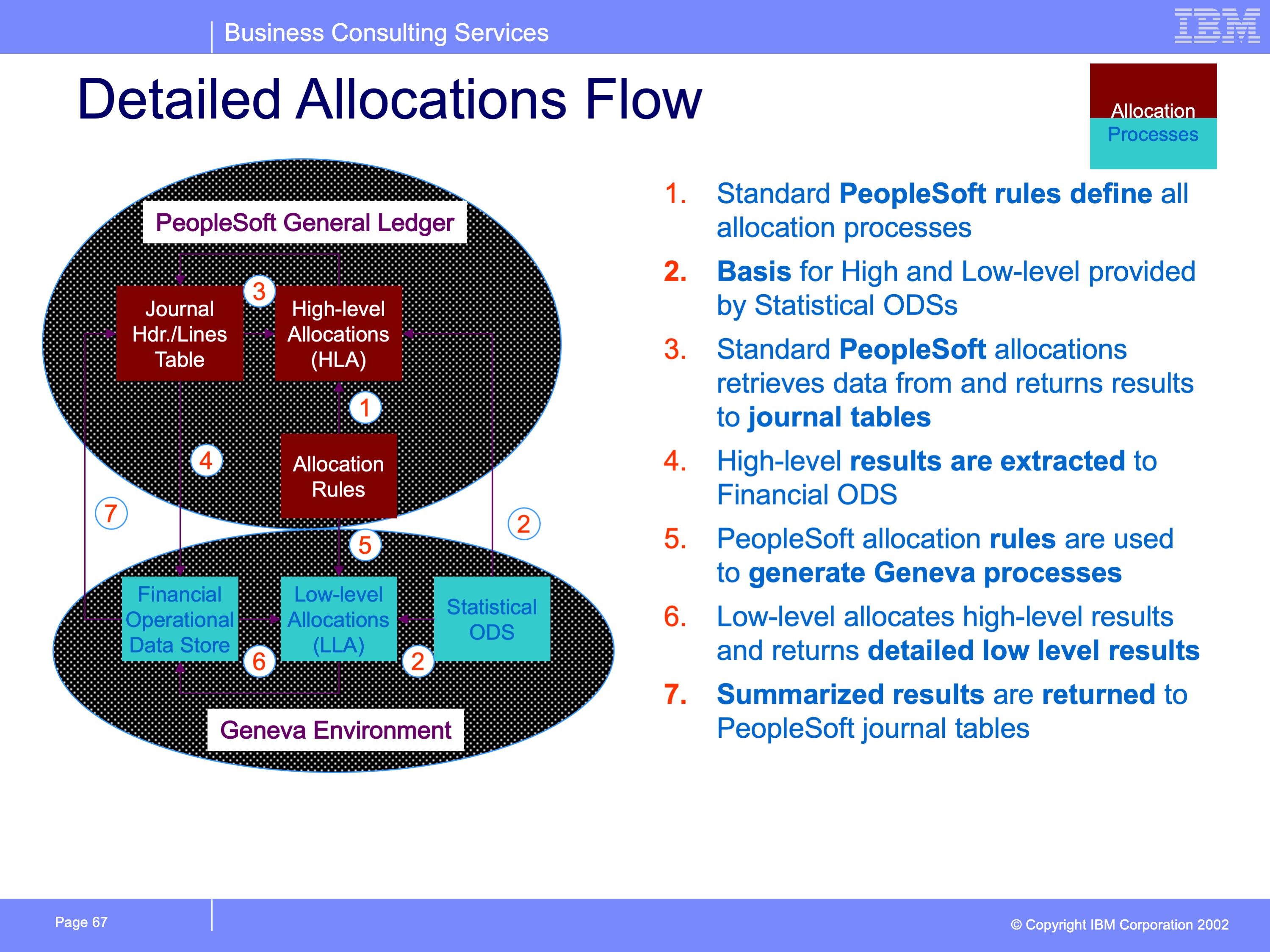

Detailed Financial Allocation Process

The most sophisticated use of Geneva emulates an ERP financial cost allocation process. The insurance company recognized that with their volume of data, the ERP package could not handle the allocation process. They would have to change their business requirements or find another solution. They looked to PwC and Geneva to supply that solution. Client members and PwC consultants analyzed the allocation process and created custom programs which generate over 6,000 Geneva views based upon over 7,000 allocation rules. Geneva executes these views to scan the financial ODS selecting records eligible for allocation. It then allocates these costs through four allocation layers, such as products, and geographical units.

During the first year of implementation, the year-end allocation process read over 50 million records selecting nearly 3 million that were eligible for allocation. These 3 million records were exploded into 186 million allocation results. The process runs in 7½ hours wall clock time. The Geneva system produces these results 250 times faster than the ERP package. Because of this innovative Geneva solution, the business users were able to have their data represented exactly as they wanted.

Geneva ERS Executive Information System

Providing users with on-line access into repositories holding hundreds of millions of detailed records is a significant challenge. The PwC team developed an innovative approach to give users the access, but not compromise the performance of the ODS processes or require massive new processing capacity. The result was the Geneva ERS Executive Information System.

This system uses Geneva to produce summary “views” of the detailed transactions. These summaries were developed within Geneva, and could summarize by any field in the ODS. Approximately 20 different cuts of the data were developed. During the load and query processes, these queries are executed to refresh the summaries from the detail transactions. Because the summaries are always regenerated from the detail, no sophisticated update processes had to be developed and they also always contain the same consistent answer.

Users access the companies Intranet site, and select which summary to view. The Geneva ERS Java applet allows users to drill down within the report to lower and lower levels of detail. Because of the unique structure of the Geneva output file, data access if very efficient. This web site is accessible by over 300 business users

The Result

Most Geneva Views are created and maintained within the Geneva ViewBuilder software. This interface stores table structures, field types, relationships between structures, and the business logic to process the data. Geneva trainers trained over 100 company employees on site on the use of the ViewBuilder, for everything from basic view construction to use of the Geneva API, to key data warehousing concepts.

With PwC assistance the company implemented the first two ODSs. They have now moved on to developing additional warehouses on their own and multiple data marts.

The result has been the ability to replace certain legacy systems with much more flexible architecture. The company has made major strides in meeting it objectives of “…a single, consistent source of financially balanced data to be used by multiple business users for decision support, data mining, and internal/external reporting.” They have been able to create business intelligence in an intelligent way.